Introduction

Over $400 billion USD in projected enterprise AI spending over the next decade is chasing systems that are fast, fluent, and confident - yet unreliable when the world changes. Today’s AI excels at recognizing patterns in familiar contexts, but collapses when confronted with unfamiliar situations - a challenge known as the out-of-distribution (OOD) problem. Agents also lack temporal understanding of shifting user needs, making it difficult for them to maintain context over time. This is not a minor technical inconvenience; it’s why Gartner predicts 40% of AI agent projects will be canceled by 2027, despite massive capital inflows.

The market is already signaling where the next breakthrough will be: AI that can reason and adapt to situations it has never seen before. In fact, Yoshua Bengio, along with a team of researchers from leading research labs across the world, concluded in their recent paper 1:

What Research Directions Will Improve Chain-of-Thought Faithfulness? Ensuring Causality.

Whoever integrates causality (also known as causal inference, the science of “cause and effect”) into AI systems will lead in building the infrastructure for a new generation of intelligent systems - the shift from “predictive parrots” to adaptive intelligence.

The Brittleness Problem

Modern Large Language Models (LLMs) and Reinforcement Learning (RL) systems are built for prediction, not understanding.

- LLMs predict the next word in a sentence, prioritizing linguistic fluency over factual accuracy. They can sound right while being dangerously wrong.

- RL systems learn by maximizing rewards in a fixed environment - great for a video game or warehouse robot, but useless when the rules change.

Both approaches depend on the world looking like the training data. Once the environment shifts (a market disruption, a new regulation, a novel supply chain failure), these systems have no internal model of why events happen, so they cannot adapt intelligently.

This is not limited to the models themselves; it carries over to every downstream application, including AI agents. LLMOps fixes (e.g. more compute at inference, agent orchestration, retrieval-augmented generation) help at the margins, but do not give the AI the ability to reason about cause and effect. Without cause-and-effect reasoning, enterprises are stuck with reactive, brittle tools.

Our Solution: Causal Reflection

We propose a temporal causal framework that reorients AI from chasing correlations to understanding what causes what, and when. Instead of asking an AI to “guess the next move,” we make it model:

- The sequence of events: what happened, in what order, and under what conditions.

- The delays and ripple effects: how a small change now might trigger a big effect later.

- The non-linear dynamics: why two similar situations can have very different outcomes.

We call this Causal Reflection because the system doesn’t just act, but also reflects on its actions, generates hypotheses about why outcomes occurred, and tests those hypotheses through counterfactual reasoning (“what if we had acted differently?”).

When integrated with LLMs, this framework transforms them from black-box predictors into explainable causal engines, capable of simulating outcomes, anticipating failures, and adapting strategies without retraining.

Our Vision

We believe time will become the next core AI modality, alongside text, vision, and audio.

By treating time as a primary dimension of intelligence, not just an incidental variable, we can build systems that reason about the unfolding world as naturally as they parse a sentence.

In the coming decade, businesses will demand AI that can operate beyond the training set, explain its reasoning, and adapt like an experienced knowledge worker.

Key Insight: Causal Reflection is the infrastructure to make that possible.

Architecture is the Problem

Transformers, the architecture powering today’s LLMs, were built for next-word prediction, not reasoning. They are optimized for linguistic fluency, not truth or logical consistency. Even when instructed to “reason step-by-step,” they collapse on multi-step problems as complexity grows (as shown in Apple’s widely shared “Illusion of Thinking” paper 2). The cost of their attention mechanism grows quadratically with sequence length, making it expensive and unstable to track extended cause-effect chains.

The result? LLMs can sound persuasive while making errors that a first-year analyst would avoid, especially in multi-step, unfamiliar scenarios. This is especially critical in AI agent workflows, where even a small early error can cascade into total task failure.
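The cascade effect can be made concrete with a little arithmetic. The sketch below assumes every step of an agent workflow succeeds independently and with the same probability, a simplification introduced here for illustration (real errors are often correlated). The function name and the 95% figure are hypothetical.

```python
def task_success_probability(step_accuracy: float, num_steps: int) -> float:
    """Chance that every step succeeds, assuming independent, equally reliable steps."""
    if not 0.0 <= step_accuracy <= 1.0:
        raise ValueError("step_accuracy must be between 0 and 1")
    if num_steps < 0:
        raise ValueError("num_steps must be non-negative")
    return step_accuracy ** num_steps


# Hypothetical agent that gets each individual step right 95% of the time.
for steps in (1, 10, 50):
    print(f"{steps:>2} steps -> {task_success_probability(0.95, steps):.3f}")
```

Under these assumptions, an agent that is 95% reliable per step completes a 10-step task about 60% of the time and a 50-step task less than 8% of the time.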

Test-time compute (i.e., allocating more processing during inference) and RAG (Retrieval-Augmented Generation) can help at the margins, but they do not solve the underlying issue: AI still lacks an internal model of causality to guide decisions in unfamiliar situations.

What does it mean for businesses?

The enterprise AI market has invested heavily in retrieval infrastructure: systems that pull the right facts into an LLM. This is a $4B+ market. But retrieval solves only half the problem. AI can find relevant data, but it still cannot reliably decide what to do with that data in a dynamic context.

The real value lies in the much larger “decision-making” problem, i.e., the tens of trillions of annual decisions made by knowledge workers, the majority of which fall outside an AI’s training distribution. Unlocking this space is a 10x bigger opportunity than retrieval alone.

Without solving OOD decision-making, enterprise AI will remain trapped in the “demo phase”: impressive in controlled tests, but too unreliable for high-stakes, dynamic environments.

The next wave of AI will not just predict. It will reason. This requires:

- A causal understanding of the environment.

- Temporal awareness, i.e., the ability to reason about sequences, delays, and ripple effects.

- Self-reflection, i.e., learning from one’s own actions without retraining from scratch.

This is not an incremental improvement. It’s a paradigm shift from reactive pattern-matching to proactive decision-making under uncertainty. Whoever builds this infrastructure will define the competitive standard for enterprise AI in the 2030s.

Causal Reflection

Our approach, Causal Reflection 3, is a fundamental rethinking of how AI models perceive, act, and learn over time. Instead of treating actions and events as isolated data points, we model them as part of a living timeline: a chain of causes and effects unfolding in a specific sequence, under specific conditions, and often with delays between action and consequence.

Causal Reflection Workflow

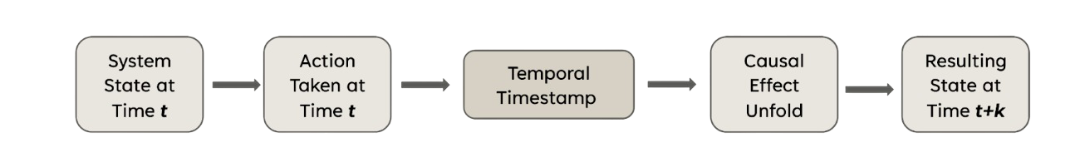

At the heart of this approach is what we call the temporal causal function. It is not just a statistical mapping from inputs to outputs; it is a structured model of how a given state, combined with a given action at a given moment, leads to a future state - even far downstream.
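One way to write this down is sketched below. This is illustrative notation, not necessarily the formalism used in the Causal Reflection paper: the symbols C (the temporal causal function), s (state), a (action), t (the moment of action), and delta (the delay) are assumptions made here. A conventional one-step transition model is shown first for contrast.

```latex
% One-step transition model: predicts only the immediate successor state.
s_{t+1} = f(s_t, a_t)

% Temporal causal function (illustrative): the delay \delta is an explicit
% argument, so the effect of one action can be queried at any horizon.
s_{t+\delta} = C(s_t, a_t, t, \delta), \qquad \delta > 0
```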

This explicitly encodes the fact that many of the most important business outcomes are delayed, indirect, and shaped by multiple interacting factors. A minor policy change today may cause a subtle shift in customer behavior months later, which in turn could trigger a major competitive response. Without temporal causal awareness, an AI system cannot anticipate or prepare for these chain reactions.

Causal Reflection builds this temporal awareness into the core of the AI’s reasoning. It tracks event sequences, understands the order and timing in which they occurred, and recognizes that causes must precede effects. It can model delayed outcomes - not just what happens immediately after an action, but what may happen days, weeks, or quarters later.
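As a minimal sketch of the "causes must precede effects" constraint, the snippet below filters an event log down to the events that could have contributed to a later outcome within a given lag window. The `Event` class, `candidate_causes` function, and the example log are all hypothetical, and temporal precedence is only a necessary condition for causation, not a sufficient one.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    name: str
    day: int  # days since the start of the log


def candidate_causes(effect: Event, history: list[Event], max_lag_days: int) -> list[Event]:
    """Return events that strictly precede `effect` by at most `max_lag_days`."""
    return [
        e for e in history
        if 0 < effect.day - e.day <= max_lag_days
    ]


# Hypothetical log; entries do not need to be stored in time order.
log = [
    Event("pricing_policy_change", day=0),
    Event("support_tickets_rise", day=45),
    Event("competitor_launch", day=120),
    Event("churn_spike", day=90),
]
churn = log[3]
print([e.name for e in candidate_causes(churn, log, max_lag_days=100)])
```

The competitor launch is excluded because it happened after the churn spike, and the spike cannot be its own cause.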

Additionally, it accounts for nonlinear dynamics, where two seemingly similar situations can produce vastly different outcomes due to small differences in the initial conditions. We formalize this with a perturbation factor that captures the chaotic tendencies of real-world systems, making the AI robust to the unpredictable twists of dynamic environments.
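To illustrate why small differences in initial conditions matter, the sketch below uses the logistic map, a textbook chaotic system, as a stand-in. It is not the perturbation factor from the framework itself; the function names and the starting values are assumptions chosen only to show sensitivity to a tiny perturbation.

```python
def logistic_step(x: float, r: float = 4.0) -> float:
    """One step of the logistic map, which behaves chaotically at r = 4."""
    return r * x * (1.0 - x)


def trajectory_gap(x0: float, perturbation: float, steps: int) -> list[float]:
    """Absolute gap between a baseline and a perturbed trajectory at each step."""
    a, b = x0, x0 + perturbation
    gaps = [abs(a - b)]
    for _ in range(steps):
        a, b = logistic_step(a), logistic_step(b)
        gaps.append(abs(a - b))
    return gaps


# Two starting points that differ by one part in a billion.
gaps = trajectory_gap(x0=0.2, perturbation=1e-9, steps=80)
print(f"initial gap: {gaps[0]:.1e}, largest gap: {max(gaps):.2f}")
```

A model that ignores this kind of amplification will treat the two starting points as interchangeable, even though their trajectories end up far apart.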

Where Causal Reflection departs most dramatically from current methods is in its self-reflection loop. The system is not just executing instructions but is observing the downstream consequences of its actions, comparing them to expectations, and adjusting its understanding of the causal structure of the world accordingly. This is how it learns without retraining from scratch. Instead of being trapped in the static statistical patterns of its initial training, it actively refines its model of “how the world works” based on real-world feedback.
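The reflect-and-adjust cycle can be sketched with a deliberately simple model. The example below assumes a single linear cause-effect relationship (outcome = effect per unit × dose) and a basic error-correction update; the `EffectBelief` class and its parameters are hypothetical and far simpler than a full causal model.

```python
class EffectBelief:
    """Running estimate of how strongly an action drives an outcome (linear toy model)."""

    def __init__(self, effect_per_unit: float, learning_rate: float = 0.5):
        self.effect_per_unit = effect_per_unit
        self.learning_rate = learning_rate

    def predict(self, dose: float) -> float:
        """Expected outcome of applying `dose` units of the action."""
        return self.effect_per_unit * dose

    def reflect(self, dose: float, observed: float) -> float:
        """Compare expectation with reality and nudge the belief; returns the surprise."""
        if dose == 0:
            raise ValueError("dose must be non-zero to learn about the effect")
        surprise = observed - self.predict(dose)
        self.effect_per_unit += self.learning_rate * surprise / dose
        return surprise


TRUE_EFFECT = 2.0  # hidden from the agent; used only to generate feedback
belief = EffectBelief(effect_per_unit=0.5)
for dose in (1.0, 3.0, 2.0, 1.0, 4.0, 2.0, 1.0, 3.0, 2.0, 1.0):
    belief.reflect(dose, observed=TRUE_EFFECT * dose)
print(round(belief.effect_per_unit, 3))
```

No retraining takes place: the belief starts far from the true effect and moves toward it purely from the gap between predicted and observed outcomes.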

When integrated with Large Language Models, Causal Reflection transforms them from eloquent but opaque predictors into explainable causal engines. At the architecture level, the LLM is driven by a causal graph that can be interrogated, simulated, and communicated in human-readable terms. This means that a business leader can not only receive a recommendation, but also ask “Why?” and get an explanation grounded in cause-and-effect reasoning, complete with alternative scenarios (“what if we had acted differently?”) and forecasts of possible futures.
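A minimal sketch of what "interrogating" and "simulating" a causal graph can look like is shown below. The three-variable graph, its coefficients, and the `simulate` and `explain` helpers are all hypothetical, and the example assumes the background noise term is known, which makes the counterfactual trivial to compute.

```python
from typing import Optional

# Hypothetical graph: discount -> demand -> revenue, and discount -> price -> revenue.
PARENTS = {
    "demand": ["discount", "demand_noise"],
    "price": ["discount"],
    "revenue": ["demand", "price"],
}


def simulate(exogenous: dict, do: Optional[dict] = None) -> dict:
    """Evaluate the graph in causal order; `do` overrides a variable (an intervention)."""
    do = do or {}
    v = {"demand_noise": exogenous["demand_noise"]}
    v["discount"] = do.get("discount", exogenous["discount"])
    v["demand"] = do.get("demand", 100 + 4 * v["discount"] + v["demand_noise"])
    v["price"] = do.get("price", 20 - v["discount"])
    v["revenue"] = v["demand"] * v["price"]
    return v


def explain(variable: str) -> str:
    """Answer 'why?' by naming the direct causes recorded in the graph."""
    return f"{variable} is driven by: {', '.join(PARENTS[variable])}"


observed = {"discount": 5, "demand_noise": 10}  # what actually happened
factual = simulate(observed)
counterfactual = simulate(observed, do={"discount": 0})  # same noise, different action

print(explain("revenue"))
print(factual["revenue"], counterfactual["revenue"])
```

Holding the noise fixed while changing only the action is what separates a counterfactual ("what if we had acted differently in this exact situation?") from a fresh prediction about an average situation.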

This integration also enables synthetic environment generation, where the AI can test its hypotheses in simulation before acting in the real world. In sectors where the cost of error is high, the ability to pre-run scenarios in a causal simulation is transformative. It shifts AI from purely probabilistic prediction to proactive guardrailing, allowing organizations to seize opportunities and avert risks.
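The idea of pre-running scenarios can be sketched as a selection rule: simulate each candidate action across several scenarios, discard any action whose worst case breaches a guardrail, and pick the best of the rest. The `choose_action` function, the `toy_profit` simulator, and all numbers below are hypothetical.

```python
from typing import Callable, Iterable, Optional


def choose_action(
    candidates: Iterable[int],
    simulate: Callable[[int, int], float],
    scenarios: list[int],
    worst_case_floor: float,
) -> Optional[int]:
    """Pick the best average outcome among actions whose worst simulated case is acceptable."""
    best_action, best_mean = None, float("-inf")
    for action in candidates:
        outcomes = [simulate(action, s) for s in scenarios]
        if min(outcomes) < worst_case_floor:
            continue  # guardrail: rejected in simulation, never tried in the real world
        mean = sum(outcomes) / len(outcomes)
        if mean > best_mean:
            best_action, best_mean = action, mean
    return best_action


def toy_profit(order_size: int, demand: int) -> float:
    """Hypothetical simulator: earn 5 per unit sold, pay 2 per unit ordered."""
    return 5 * min(order_size, demand) - 2 * order_size


demand_scenarios = [60, 100, 140]
print(choose_action([50, 100, 150], toy_profit, demand_scenarios, worst_case_floor=50))
print(choose_action([50, 100, 150], toy_profit, demand_scenarios, worst_case_floor=120))
```

Tightening the floor from 50 to 120 moves the choice from an order of 100 to the more conservative order of 50, which shows how a guardrail trades expected upside for protection against the bad scenario.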

Perhaps most importantly, Causal Reflection provides a new pathway to human alignment. Rather than relying solely on reinforcement learning from human feedback (commonly known as RLHF), our framework enables the AI to hypothesize about the intent behind human goals and to test those hypotheses against observed preferences over time. This results in systems that can adapt to evolving human needs, anticipate misalignments before they cause damage, and adjust their behavior in ways that remain consistent with the operator’s underlying objectives.
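One simple way to picture "testing hypotheses about intent against observed preferences" is a Bayesian update over a small set of candidate intents. This is a swapped-in, standard technique used here for illustration, not a description of the framework's actual mechanism; the hypotheses and likelihood values are assumptions.

```python
def update_beliefs(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Bayes' rule over a finite set of intent hypotheses."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observation is impossible under every hypothesis")
    return {h: p / total for h, p in unnormalized.items()}


# Two hypothetical readings of what the operator really wants.
beliefs = {"minimize_cost": 0.5, "minimize_delivery_time": 0.5}

# Assumed chance of the operator choosing express shipping under each hypothesis.
express_chosen = {"minimize_cost": 0.2, "minimize_delivery_time": 0.8}

for _ in range(3):  # the operator picks express shipping three times in a row
    beliefs = update_beliefs(beliefs, express_chosen)

print({h: round(p, 3) for h, p in beliefs.items()})
```

After three consistent choices the system is strongly, but not absolutely, convinced that speed matters more than cost, and a later run of contrary choices would shift the belief back.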

The combination of temporal awareness, self-reflection, and causal reasoning creates a category of AI that is fundamentally different from today’s dominant architectures. It is not a passive statistical mirror of past data, nor an overconfident pattern matcher. It is an active, adaptive, reasoning system capable of understanding and navigating complexity much as an experienced knowledge worker does. This is the capability gap that must be closed before AI can move from controlled experiments into the unpredictable realities of critical business decision-making.

Time as a Modality

So far, AI has evolved in waves defined by the types of information it can process well. The first wave mastered static text. The second, images. The third, audio and speech. Today’s breakthrough models can handle all three, sometimes together, but they still treat each as an isolated channel. They lack a unifying principle that ties together how events unfold in the real world.

The next great leap will come when AI treats time itself as a first-class modality. Time is not simply another data feature; it is the dimension in which every decision, every event, every cause-and-effect chain plays out. Whether it’s a financial market reacting to geopolitical news, a viral trend building and fading on social media, or a patient’s health shifting in response to treatment, the temporal structure of events is what gives them meaning. Without it, AI is blind to the real shape of the world.

Our framework is built around this insight. By elevating time to a core modality, AI can go beyond seeing the world as a collection of disconnected snapshots and instead understand it as a continuous, evolving system. This changes the nature of machine intelligence in three fundamental ways.

First, it enables true causal reasoning at scale. Once time is treated as a primary input, an AI agent can reason about sequences, delays, and feedback loops with the same fluency currently applied to words and images. It can anticipate outcomes before they happen because it understands the temporal pathways that lead there.

Second, it enables adaptive decision-making in the face of novelty. In a purely statistical model, novelty is either an error or something to be forced into the nearest familiar pattern, even if that pattern is irrelevant. In a time-aware causal model, novelty is simply a new branch in the unfolding causal graph. The system can place it in context, simulate downstream effects, and respond strategically without having seen that exact scenario before.

Third, it opens the door to a unified intelligence architecture where text, images, numbers, and sensor readings are all understood as events embedded in time. This collapses the artificial boundary between “language tasks” and “planning tasks” or between “visual perception” and “logistics optimization.” In a temporal framework, these are all just different slices of the same evolving system.
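A minimal sketch of "events embedded in time" is shown below: records from different modalities share one timestamped structure and are merged into a single timeline. The `TimedEvent` class, the `merge_timelines` helper, and the sample data are hypothetical, and each input stream is assumed to be sorted by time already.

```python
import heapq
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class TimedEvent:
    timestamp: float  # seconds since some shared reference point
    modality: str     # e.g. "text", "image", "sensor"
    payload: Any


def merge_timelines(*streams: list[TimedEvent]) -> list[TimedEvent]:
    """Merge per-modality streams (each already time-sorted) into one timeline."""
    return list(heapq.merge(*streams, key=lambda e: e.timestamp))


text = [
    TimedEvent(1.0, "text", "order placed"),
    TimedEvent(9.0, "text", "complaint filed"),
]
sensor = [
    TimedEvent(4.0, "sensor", {"warehouse_temp_c": 31}),
    TimedEvent(6.5, "sensor", {"warehouse_temp_c": 38}),
]
image = [TimedEvent(7.0, "image", "damaged_parcel.jpg")]

timeline = merge_timelines(text, sensor, image)
print([(e.timestamp, e.modality) for e in timeline])
```

Once everything sits on one timeline, a question such as "what happened before the complaint?" no longer depends on which modality each record came from.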

What’s Next?

The strategic implication is clear. In the coming decade, the winners in enterprise AI will not be those who simply have the largest models or the most data. They will be the ones who can harness time as a modality - building systems that think in cause and effect, adapt in real time, and explain their reasoning to human operators. That capability will be the foundation for AI in high-value, high-risk environments. Causal Reflection is our blueprint for that future.

Our long-term vision is for Causal Reflection to become a core part of how enterprises use AI: a standard reasoning layer for enterprise AI, much as GPUs became the default compute layer for machine learning once CPUs became the bottleneck. We believe that within five years, time-aware causal reasoning will be as fundamental to AI architectures as attention mechanisms are today. Our goal is to help guide how this capability is developed, deployed, and scaled in practice.

For AI engineers and research scientists, this timing is critical. The market is at the peak of LLM hype, but enterprises are already discovering the limitations of these systems in real-world deployment. As the pendulum swings toward reliability, adaptability, and explainability, the companies positioned with causal intelligence will define the next era of AI infrastructure.

Causal Reflection is more than a research project - it’s a step toward building AI systems that can reason about the world as something dynamic, uncertain, and constantly unfolding over time. Developing this foundation will not only contribute to today’s AI progress, but also help shape how the field evolves in the years ahead.